Research

My research encompasses various facets of statistics and optimization. Below is a list of my publications. You are welcome to contact me if you are interested in one or more of my papers. I am happy to discuss!

Names of my students are underlined.

Preprints and papers in progress

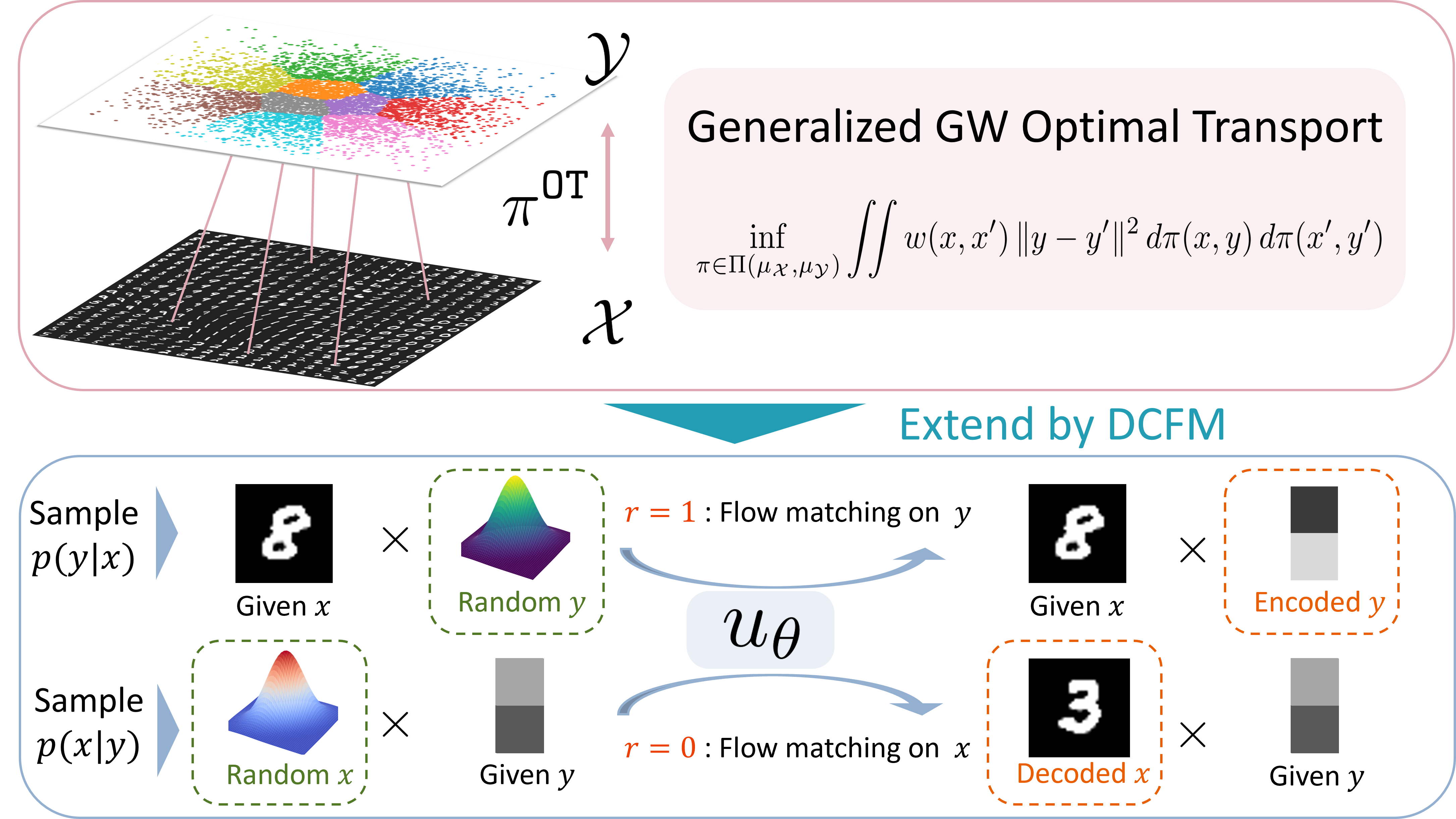

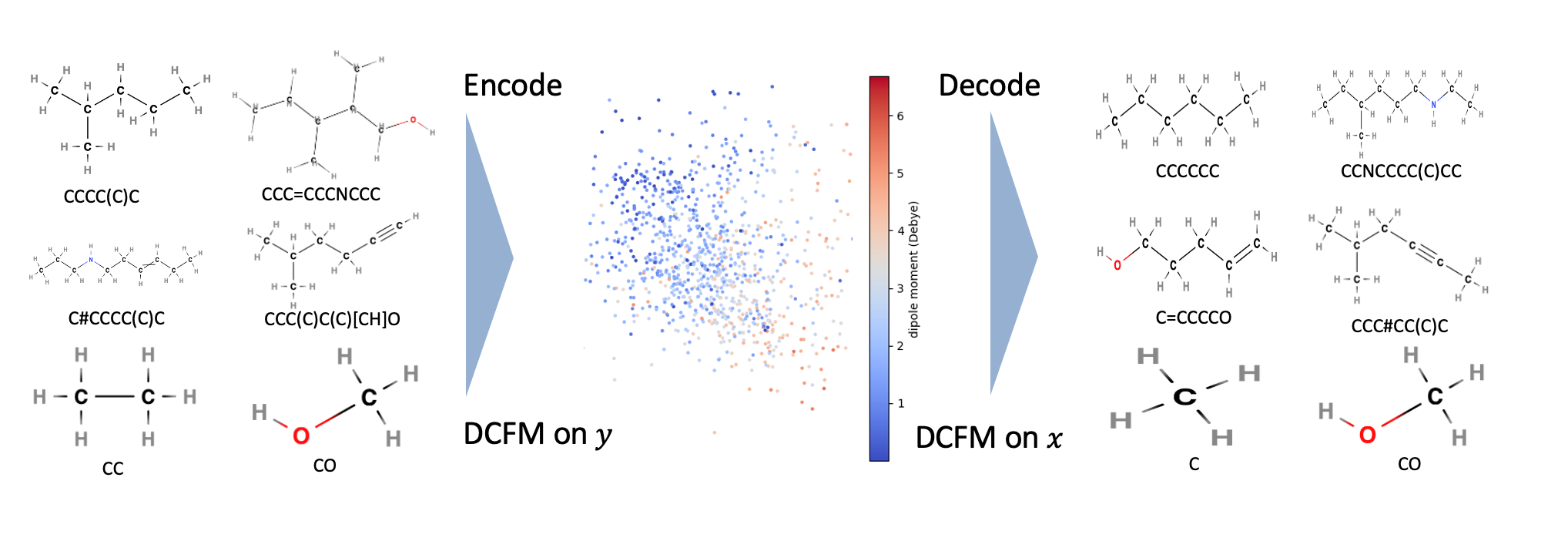

[In submission] Coupled Flow Matching Wenxi Cai, Yuheng Wang, Naichen Shi, 2025. Link.

[In submission] Domain Generalization: A Tale of Two ERMs Yilun Zhu, Naihao Deng, Naichen Shi, Aditya Gangrade, Clayton Scott. Link

[In submission] ALBATROSS: Cheap Filtration Based Geometry via Stochastic Sub-Sampling Andrew J Stier, Naichen Shi, Raed Al Kontar, Chad Giusti, Marc G Berman. Link

[In submission] Heterogeneous Matrix Factorization: When Features Differ by Dataset Naichen Shi, Salar Fattahi, Raed Kontar. Link

Journal papers

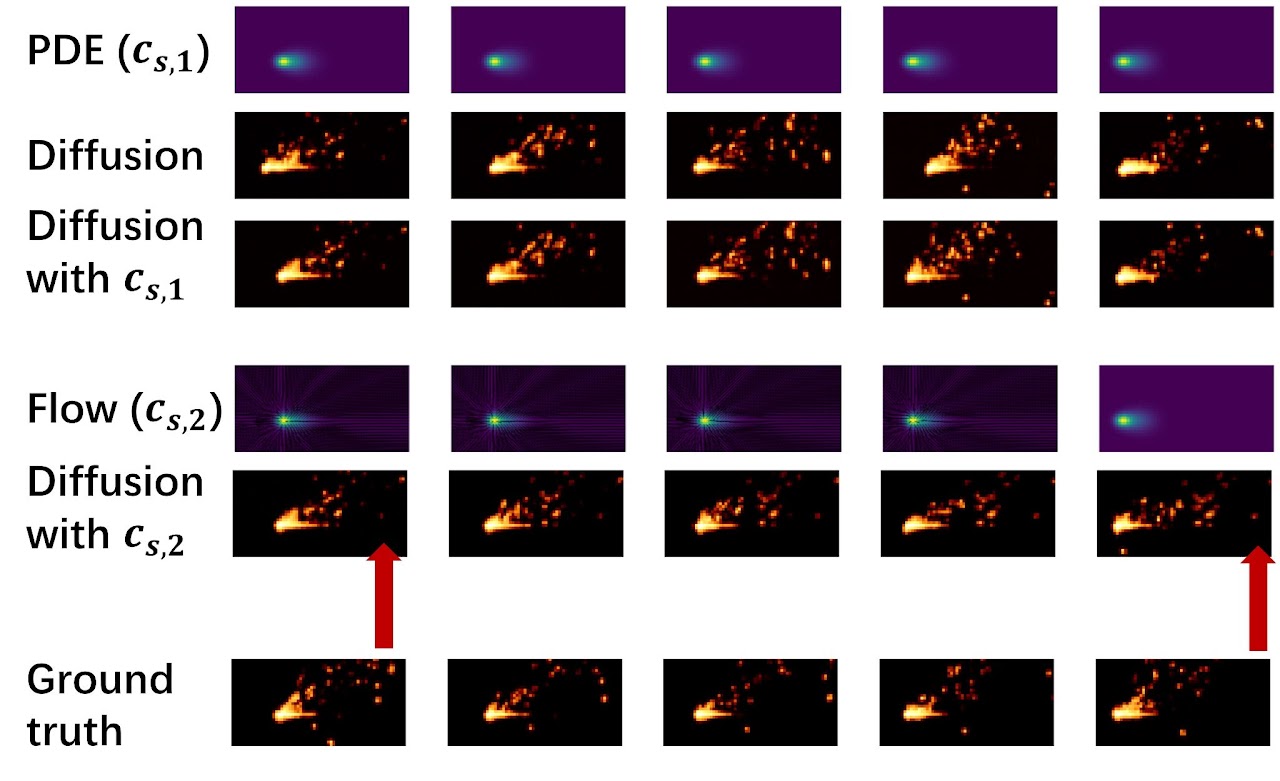

[IEEE T-ASE] Diffusion-Based Surrogate Modeling and Multi-Fidelity Calibration Naichen Shi, Hao Yan, Shenghan Guo, Raed Kontar. IEEE Transactions on Automation Science and Engineering, 2025. Link, Code.

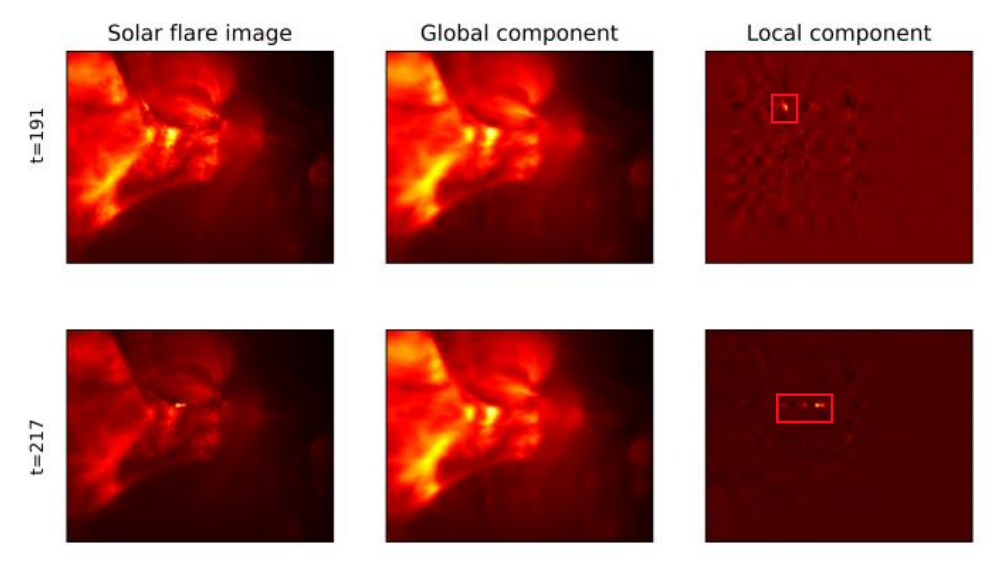

[JMLR] Triple Component Matrix Factorization: Untangling Global, Local, and Noisy Components Naichen Shi, Salar Fattahi, Raed Al Kontar. Journal of Machine Learning Research (JMLR), 2024. Link

[Technometrics] Personalized Tucker Decomposition: Modeling Commonality and Peculiarity on Tensor Data Jiuyun Hu, Naichen Shi, Raed Kontar, Hao Yan. Technometrics, 2024. Link

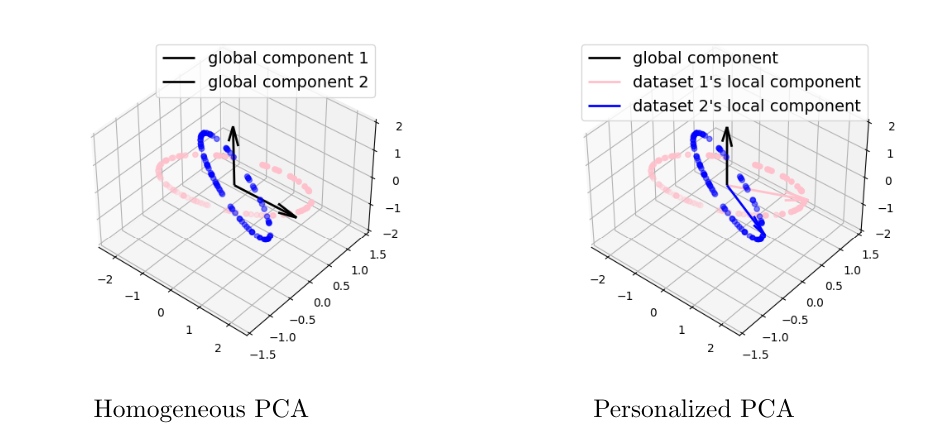

[JMLR] Personalized PCA: Decoupling Shared and Unique Features Naichen Shi, Raed Al Kontar. Journal of Machine Learning Research (JMLR), 2024. Link, Video, Code.

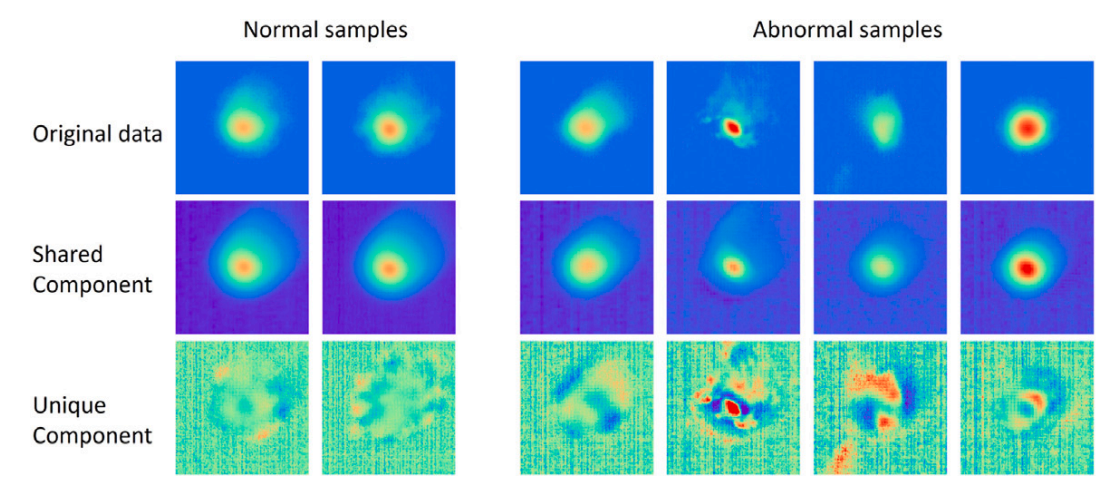

[JMS] Personalized feature extraction for manufacturing process signature characterization and anomaly detection Naichen Shi, Shenghan Guo, Raed Al Kontar. Journal of Manufacturing Systems, 2024. Link.

[Technometrics] Personalized Federated Learning via Domain Adaptation with an Application to Distributed 3D Printing Naichen Shi, Raed Al Kontar. Technometrics, 2023. Link, Video, Code.

[IEEE T-ASE] Fed-ensemble: Ensemble Models in Federated Learning for Improved Generalization and Uncertainty Quantification Naichen Shi, Raed Al Kontar. IEEE Transactions on Automation Science and Engineering, 2022. Link, Code.

[IEEE Access] The Internet of Federated Things Raed Kontar, Naichen Shi, Xubo Yue, Seokhyun Chung, Eunshin Byon, Mosharaf Chowdhury, Judy Jin, Wissam Kontar, Neda Masoud, Maher Noueihed, Chinedum E. Okwudire, Garvesh Raskutti, Romesh Saigal, Karandeep Singh, Zhisheng Ye. IEEE Access, 2021. Link.

Conference papers

[AISTATS] Calibrated Principal Component Regression Yixuan Florence Wu, Yilun Zhu, Lei Cao, Naichen Shi. Twenty-Ninth Annual Conference on Artificial Intelligence and Statistics (AISTATS), 2026. Link

[NeurIPS] Inv-Entropy: A Fully Probabilistic Framework for Uncertainty Quantification in Language Models Haoyi Song, Ruihan Ji, Naichen Shi, Fan Lai, Raed Al Kontar. The Thirty-ninth Annual Conference on Neural Information Processing Systems (NeurIPS), 2025. Link

[NeurIPS Spotlight] Personalized Dictionary Learning for Heterogeneous Datasets Geyu Liang, Naichen Shi, Raed Al Kontar, Salar Fattahi. Thirty-seventh Conference on Neural Information Processing Systems (NeurIPS), 2023. Link, Code.

[MSEC] Process Signature Characterization and Anomaly Detection with Personalized PCA in Laser-Based Metal Additive Manufacturing Naichen Shi, Raed Kontar, Shenghan Guo. Proceedings of the ASME 2023 18th International Manufacturing Science and Engineering Conference, 2023. Link.

[NeurIPS Spotlight] Adam Can Converge Without Any Modification On Update Rules Yushun Zhang, Congliang Chen, Naichen Shi, Ruoyu Sun, Zhiquan Luo. Thirty-sixth Conference on Neural Information Processing Systems (NeurIPS), 2022. Link.

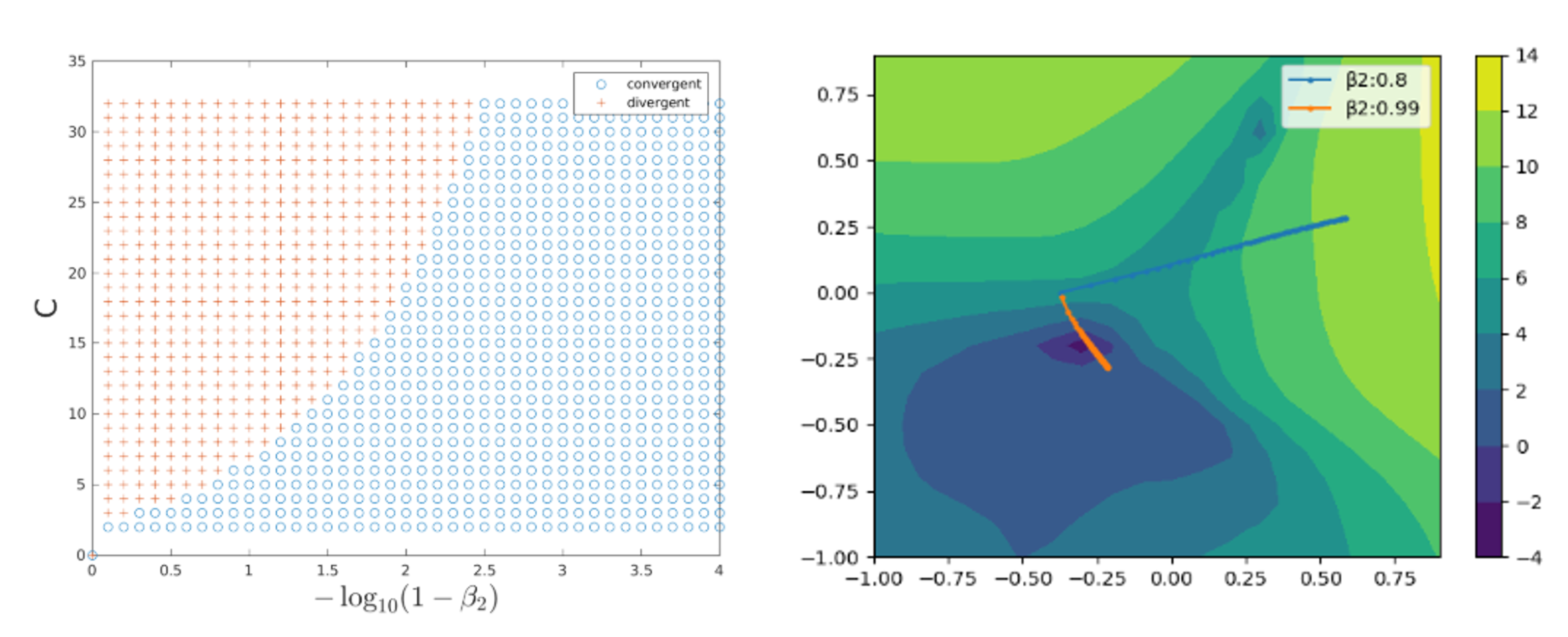

[ICLR Spotlight] RMSprop converges with proper hyper-parameter Naichen Shi, Dawei Li, Mingyi Hong, Ruoyu Sun. International Conference on Learning Representations (ICLR), 2021. Link, Video, Code.

You can also check my Google Scholar profile.